Fortunately, I received an email from the Museum of the History of Medicine of Rio Grande do Sul (MUHM), which needed a forensic facial reconstruction.

The skeleton's nickname is Joaquim. He was a prisoner who died as an indigent in France in 1920. In 2006 a family of doctors donated him to the museum.

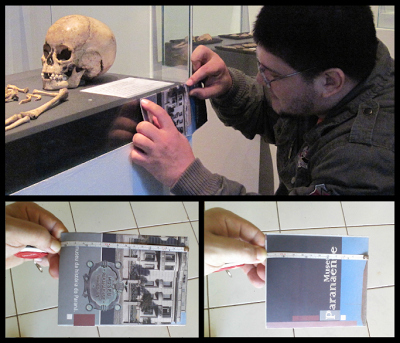

I requested a CT scan, and the museum staff sent me not only the head but Joaquim's entire body.

So I will reconstruct the whole body, but for now only the head is done.

To reconstruct the bones in 3D I used InVesalius, an open-source CT scan viewer. I had to export several files with different settings because the amount of data is huge.

As I said, in this first part of the Joaquim Project I will reconstruct only the face. In MeshLab I cleaned up the noise left by the 3D reconstruction of the CT scan.
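The post doesn't say which MeshLab filters were used, but a common cleanup step for CT-derived meshes is deleting small "floating" pieces that are not connected to the skull. The sketch below illustrates that idea in plain Python; it is not MeshLab's implementation. It assumes the mesh is a list of triangles given as vertex-index triples, treats two triangles as connected when they share a vertex, and drops every connected piece with fewer than a hypothetical `min_faces` triangles.

```python
from collections import defaultdict


def remove_small_components(faces, min_faces):
    """Drop connected pieces with fewer than min_faces triangles.

    faces: list of (i, j, k) vertex-index triples (assumed format).
    Two triangles belong to the same piece if they share a vertex.
    """
    parent = {}

    def find(x):
        # Union-find root lookup with path halving.
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[ra] = rb

    # All vertices of one triangle end up in the same set.
    for i, j, k in faces:
        union(i, j)
        union(j, k)

    # Count triangles per piece, keyed by the root of each triangle's first vertex.
    counts = defaultdict(int)
    for f in faces:
        counts[find(f[0])] += 1

    return [f for f in faces if counts[find(f[0])] >= min_faces]


# Toy example: a 3-triangle "skull" plus a 1-triangle speck of noise.
skull = [(0, 1, 2), (1, 2, 3), (2, 3, 4)]
speck = [(10, 11, 12)]
print(remove_small_components(skull + speck, min_faces=2))
# prints [(0, 1, 2), (1, 2, 3), (2, 3, 4)]
```

On a real skull the threshold has to be chosen with care: set it too high and thin, genuinely separate bone fragments disappear along with the noise.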

The skull was incomplete. To recover the mandible, I made a projection using the Sassouni/Krogman method shown in Karen T. Taylor's book.

With the help of forensic dentist Dr. Paulo Miamoto, we estimated Joaquim's age range: 30 to 50 years.

Then I placed the tissue depth markers.
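Geometrically, a tissue depth marker is a peg that sticks out from a landmark on the skull, along the outward surface direction, by the expected soft-tissue thickness at that spot. The following is a minimal sketch of that geometry, assuming each landmark is given as a 3D point in millimetres with an outward normal. The landmark coordinates and depths in the example are made-up placeholders, not values from any reference table or from Joaquim's reconstruction.

```python
import math


def marker_tip(point, normal, depth_mm):
    """Return the tip position of a tissue depth marker.

    point: (x, y, z) landmark on the skull surface, in mm.
    normal: outward surface direction at the landmark (any non-zero length).
    depth_mm: soft-tissue thickness to apply at this landmark.
    """
    length = math.sqrt(sum(c * c for c in normal))
    if length == 0:
        raise ValueError("normal must be non-zero")
    # Move from the bone surface along the unit normal by depth_mm.
    return tuple(p + depth_mm * c / length for p, c in zip(point, normal))


# Hypothetical landmarks: (surface point, outward normal, depth in mm).
# These numbers are placeholders, NOT real tissue depth data.
landmarks = {
    "glabella": ((0.0, 95.0, 40.0), (0.0, 1.0, 0.0), 5.0),
    "nasion": ((0.0, 92.0, 30.0), (0.0, 2.0, 0.0), 6.0),
}
for name, (pt, n, d) in landmarks.items():
    print(name, marker_tip(pt, n, d))
# prints:
# glabella (0.0, 100.0, 40.0)
# nasion (0.0, 98.0, 30.0)
```

Connecting the marker tips is what gives the outline of the soft tissue, which is why the profile can be sketched once the markers are in place.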

With the markers in place, it was possible to sketch the profile of the face.

I don't know whether Joaquim was really born in France, but he looks like a Frenchman.

Thanks to:

Éverton Quevedo and Letícia Castro from MUHM.

A big hug, and see you in the next one!