This year, thanks to Prof. Tiziano Camagna, we had the opportunity to test our methodologies during an unusual archaeological expedition, focused on locating and documenting the "devils' boat".

This strange wreck is a small boat built during World War I by Italian soldiers, the "Alpini" of the "Edolo" battalion (nicknamed the "Adamello devils"), near the J. Payer mountain hut (as reported in Luciano Viazzi's book "I diavoli dell'Adamello").

The mission was an offshoot of the project "La foresta sommersa del lago di Tovel: alla scoperta di nuove figure professionali e nuove tecnologie al servizio della ricerca" ("The submerged forest of lake Tovel: discovering new professions and new technologies at the service of scientific research"), a didactic program conceived by Prof. Camagna for the high school Liceo Scientifico B. Russell of Cles (Trentino - Italy).

As already mentioned, the target of the expedition was the small boat currently lying on the bottom of lake Mandrone (Trentino - Italy), previously located by Prof. Camagna and later photographed during an exploration in 2004. The lake lies at 2450 meters above sea level. For this reason, before involving the students in such a difficult underwater project, we carried out a preliminary mission to check the general conditions and perform some basic operations. This first mission was directed by Prof. Camagna and supported by the archaeologists of Arc-Team (Alessandro Bezzi and Luca Bezzi for underwater documentation, Rupert Gietl for GNSS/GPS localization and boat support), by the explorers of the Nautica Mare team (Massimiliano Canossa and Nicola Boninsegna) and by the experts of WitLab (Emanuele Rocco, Andrea Saiani, Simone Nascivera and Daniel Perghem).

The first target of the first mission (26 and 27 August 2016) was to locate the boat, since the exact position of the wreck was not known. Once the boat had been rediscovered, the second target was to perform all the operations needed to georeference the site, so that the team of divers could concentrate on the third: the correct archaeological documentation of the boat. In addition to these objectives, the mission was an occasion to test for the first time, in a real operating scenario, the ArcheoROV, the open-hardware ROV developed by Arc-Team and WitLab.

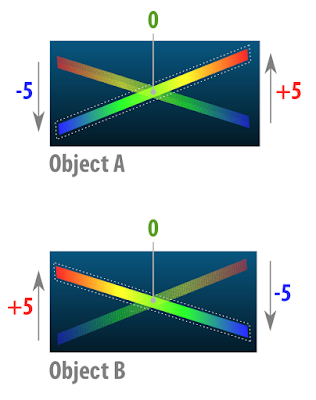

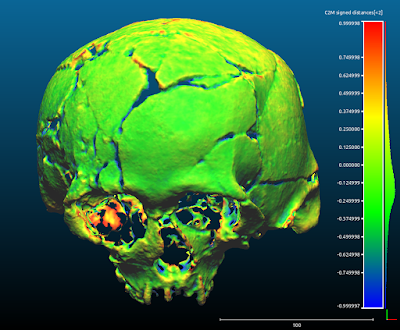

Target 1 was achieved quickly and easily on the second day of the mission (the first day was dedicated to the divers' acclimatization at 2450 meters a.s.l.): the weather and environmental conditions were particularly good, so the boat was visible from the lake shore. Target 2 was reached by positioning the GPS base station on a reference point of the "Comitato Glaciologico Trentino" ("Glaciological Committee of Trentino") and using the rover from an inflatable kayak to record some Control Points on the surface of the lake, each connected through a reel to a strategic point on the wreck. Target 3 was completed by collecting pictures for a post-mission 3D reconstruction through simple SfM techniques (already applied in underwater archaeology).

The open source software used in post-processing was PPT and openMVG (for 3D reconstruction), MeshLab and CloudCompare (for mesh editing), MicMac (for the orthophoto) and QGIS (for archaeological drawing), all running on the (still) experimental new version of ArcheOS (Hypatia). Unlike in other projects, this time we preferred to recover the original colours from the underwater photos (to help the SfM software in the 3D reconstruction), using a series of commands from the open source software suite ImageMagick (soon I'll write a post about this operation).

Once the primary targets were completed, the spare time of the first expedition was dedicated to secondary objectives: testing the ArcheoROV (as mentioned before), with positive feedback, and the 3D documentation of the landscape surrounding the lake (to improve the free LIDAR model of the area).
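As a rough illustration of the kind of colour recovery mentioned above, here is a minimal sketch in Python. It is not the series of ImageMagick commands used for this project; it only assumes a simple per-channel linear stretch, one common way to reduce the blue-green cast of underwater photos. The function names (`stretch_channel`, `stretch_rgb`) and the percentile parameters are hypothetical.

```python
def stretch_channel(values, low_pct=1.0, high_pct=99.0):
    """Linearly rescale one colour channel so that the chosen
    low/high percentiles map to 0 and 255 (values are clipped)."""
    ordered = sorted(values)
    n = len(ordered)
    lo = ordered[int((n - 1) * low_pct / 100)]
    hi = ordered[int((n - 1) * high_pct / 100)]
    if hi == lo:
        # Flat channel: nothing to stretch, return it unchanged.
        return list(values)
    scale = 255 / (hi - lo)
    return [min(255, max(0, round((v - lo) * scale))) for v in values]


def stretch_rgb(pixels, low_pct=1.0, high_pct=99.0):
    """Stretch the R, G and B channels independently.

    `pixels` is a list of (r, g, b) tuples with values in 0..255.
    """
    if not pixels:
        return []
    channels = zip(*pixels)  # split into R, G and B sequences
    stretched = [stretch_channel(c, low_pct, high_pct) for c in channels]
    return list(zip(*stretched))  # back to a list of (r, g, b) tuples


# Hypothetical example: three pixels with a strong blue cast.
sample = [(10, 100, 150), (20, 120, 200), (30, 140, 250)]
balanced = stretch_rgb(sample, low_pct=0, high_pct=100)
```

Stretching each channel on its own gives the weak red channel the same range as green and blue, which is the point of the exercise: the more balanced images offer more usable contrast to the feature matching step of SfM software.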

What could not be foreseen for the first mission was serendipity: before surfacing, the divers of the Nautica Mare team (Nicola Boninsegna and Massimiliano Canossa) found a tree on the bottom of the lake. From an archaeological point of view it was soon clear that this could be an important discovery, as the surrounding landscape (periglacial grasslands) is treeless: the woods lie almost 200 meters lower. The technicians of Arc-Team geolocated the trunk with the GPS, so that it could be sampled during the second mission.

For this reason, the second mission changed its priority and focused on recovering core samples by drilling the submerged tree. Further analysis (performed by Mauro Bernabei, CNR-ivalsa) demonstrated that the tree was a Pinus cembra L. with the last ring dated to 2931 B.C. (4947 years old). Nevertheless, the expedition maintained its educational purpose, teaching the students of the Liceo Russell the basics of underwater archaeology and performing with them some tests with a low-cost sonar, in order to map part of the lake bottom.
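The age given in parentheses can be checked with a little arithmetic. The sketch below assumes 2016 (the year of the first mission) as the reference year, and the function name `years_since` is hypothetical. A plain sum of the two years reproduces the 4947 figure, while strict calendar counting, which has no year 0, gives one year less.

```python
def years_since(bc_year, ad_year, skip_year_zero=False):
    """Years elapsed between a B.C. year and an A.D. year.

    With skip_year_zero=True the count accounts for the fact that
    the calendar goes straight from 1 B.C. to A.D. 1.
    """
    elapsed = bc_year + ad_year
    return elapsed - 1 if skip_year_zero else elapsed


# Last ring dated to 2931 B.C., reference year assumed to be 2016.
simple_age = years_since(2931, 2016)
strict_age = years_since(2931, 2016, skip_year_zero=True)
```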

All the operations performed during the two underwater missions are summarized in the slides below, which come from the lesson I gave to the students in order to complete our didactic task at the Liceo B. Russell.

Acknowledgements

Prof. Tiziano Camagna (Liceo Scientifico B. Russell), for organizing the missions

Massimiliano Canossa and Nicola Boninsegna (Nautica Mare Team), for the professional support and for discovering the tree

Mauro Bernabei and the CNR-ivalsa, for analyzing and dating the wood samples

The Galazzini family (tenants of the refuge “Città di Trento”), for the logistical support

The wildlife park “Adamello-Brenta” and the Department for Cultural Heritage of Trento (Office of Archaeological Heritage), for their close cooperation

Last but not least, Dott. Stefano Agosti, Prof. Giovanni Widmann and the students of Liceo B. Russell: Borghesi Daniele, Torresani Isabel, Corazzolla Gianluca, Marinolli Davide, Gervasi Federico, Panizza Anna, Calliari Matteo, Gasperi Massimo, Slanzi Marco, Crotti Leonardo, Pontara Nicola, Stanchina Riccardo
